by Gene Turnbow | Nov 4, 2025 | Books, Writing

I keep hearing this trope about how your characters speak to you and have minds of their own. I never understood this.

As a writer, I know what my characters should be doing, where my story is supposed to be going and why, and the emotional notes I want to hit and why, and then I make them do what I want.

And if I can’t, it means I haven’t thought things through properly, or worked out enough of the back story for my characters, so I go back and do the missing work. I’m never surprised by what my characters do in my stories. They are as I write them.

by Gene Turnbow | Oct 11, 2025 | Books, Business, Writing

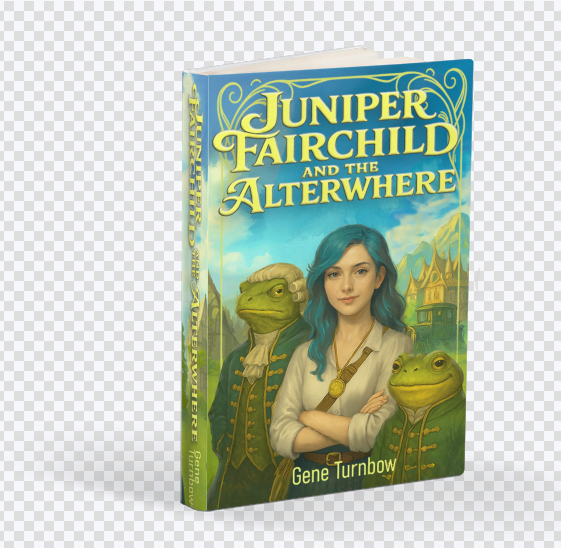

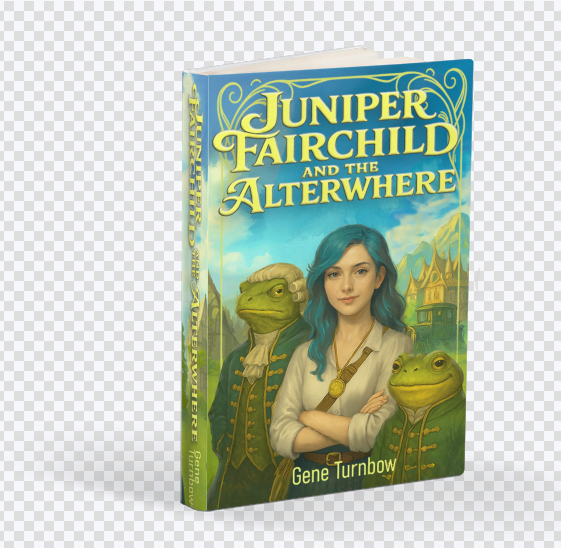

This is just sort of a grab bag of stuff I learned while publishing my first book, Juniper Fairchild and the Alterwhere. This advice applies to both indie authors and operators of small presses.

Know Your Genre. Read.

Read everything. It’s great if you know the classics, but read the new stuff. Learn how to build your stories so that they fit in the formats your anticipated audience will expect to see.

Do not write a 600 page doorstop as your first book. You can do that later if you get popular. Likewise, do not write a pamphlet and present it as a book and expect to be taken seriously.

Organize Everything

People who say they write by the seat of their pants aren’t telling you the whole truth: sooner or later you have to make an outline, and you have to fit what you’ve written into that outline, or you haven’t got a book. It’s better to plan things out, then write. Authors who can write straight ahead are those who are so skilled that they can do the planning part in their heads without writing it down. You are not one of those writers.

ISBN Numbers

You should buy a batch of ISBN numbers from Bowker. Don’t buy them one at a time. Each version of your book will need a separate one, and by the time you’ve used three, you might as well have bought the ten pack.

Do not buy ISBN numbers from anyone else. They’re lying to you when they say they can sell you one. ISBN numbers are not transferable, and Bowker is the only source in the United States.

Do NOT pay the extra $25 for a barcode from Bowker. Barcodes are automatically generated by both KDP and Ingram Spark and applied to your book cover for you, so you never have to fuss with them, and you get those barcodes for free.

If the only place you will ever publish your book is Amazon, then you do not need to buy an ISBN number at all. They can supply you with one, but because they own that ISBN number and you don’t, you cannot use it anywhere but on Amazon.

If you’re Canadian, don’t buy your ISBN number from anybody. Get an account with Library & Archives Canada, which will issue you a batch of ISBN numbers for free. Follow this link to find out what you need to know.

Copyrights

File for a copyright for your work. It only costs $45, and you can’t necessarily rely on your publisher to do it for you, even if they’re contractually obligated to do it, because publishers are human at best, and people forget things. If you’re publishing your own work, there’s no excuse not to. If you run your own publishing company, encourage your authors to do it themselves and show them how. Authors owning their own copyright is a powerful thing.

Library of Congress Numbers (LCCN) – Getting into Libraries

To get your book into libraries, you’ll likely want to take the extra step of getting your book registered with the Library of Congress, and that means sending them two physical copies of your book. In return, you get an LCCN, a Library of Congress Control Number. That number, when used by other libraries, provides access to other important information about your book.

Do you have to have an LCCN to publish? No.

Can you get into bookstores without one? Yes.

However, all the best books, and every book published by the Big Five, have LCCNs. If you want to be taken seriously, an LCCN is advised, and people expect to see it on your copyright page. Your opinion on this may differ from mine, but I think skipping this would be like going to a dinner party in a tuxedo jacket and Bermuda shorts, or in a cocktail dress and beach sandals.

Getting Into Bookstores

It’s easier than you think. You just have to go through all the steps.

If you want to get your book into libraries and bookstores, you will have to publish either through Barnes & Noble Press, or Ingram Spark. There is no point in doing both. Either of them will ask you for an ISBN number and will not provide one to you.

Once you are listed on either of these services, though, any bookstore in the world and any library in the world will be able to order your book. If your book is listed on Ingram Spark, it will automatically also be listed on Barnes & Noble. I walked into a local Barnes & Noble, introduced myself, showed them my book, and they ordered from Barnes & Noble on the spot.

Don’t Do Your Interior Design in Word. Just… don’t.

Don’t submit the interior design of your book as a Word document. I know they all accept that format, but don’t do it. For a good interior design, you will want pixel-accurate control. You’re not getting that from a Word document. Use Atticus or Adobe InDesign to output a PDF file.

Ingram Spark versus Amazon KDP

Both Ingram Spark and Amazon allow you to update both your cover and your content, but only Amazon lets you do that for free after publication. Ingram Spark lets you do it but only up to 60 days after initial submission. After that, they’ll charge you $25 each time you do it. Get it right the first time if you can.

Do not assume that you can upload changes to either service and have those changes propagate to books already in the printing pipeline. They can’t just yank content and replace it on the fly. Printing pipelines are enormous machines, and once you load up the book’s content and press “go”, that’s it. It goes into the machine and comes out as a book. The machine cannot be interrupted or opened while it’s running. Any changes you make will apply to the next batch, not any currently running batches.

I have watched videos where self-published authors have gotten their knickers all atwist because they tried to make last-minute changes after orders were already in the pipeline, and they did not understand that it wasn’t that Ingram Spark WOULD not help them, it was that they COULD not help them.

Be aware that KDP uses a different coating on their matte cover stock for the covers than Ingram Spark does. Ingram Spark’s cover coating results in deeper, richer colors. The difference is noticeable, but only when you’re holding examples side by side in your hands. You’ll never notice the difference otherwise.

Wrapup

I hope this list of tips and tricks is useful. I learned it all by trial and error and doing my own research. I was surprised to find that nobody had ever assembled these tips together on a single page before, so may this list short circuit your journey and make your life as a published author somewhat less of a struggle.

–Gene

by Gene Turnbow | Sep 6, 2025 | Books

People like to complain, and when they have trouble with something the first thing they do is try to shift the blame to somebody else. It’s never them. So naturally, if something is a little harder, it tends to attract negative attention.

And so we come to Ingram Spark.

If you’re not aware, Ingram Spark is the single largest, best established, and by far the cheapest print on demand service outside of Amazon KDP itself. They provide a catalog service from which libraries and bookstores can order your books, putting you on the same technical footing as traditional publishers when it comes to distribution. There are other companies that do this, but Ingram Spark is far and away the biggest and most recognized name in publishing, outside of the Big Five publishers.

“The Big Five” in publishing refers to the five largest trade book publishing companies: Penguin Random House, HarperCollins, Simon & Schuster, Hachette Book Group, and Macmillan Publishers.

Now we get to the crux of the complaints: their interface. I read through all the documentation on their web site, and found it straightforward. There were no confusing bits. Everything did what it said it did. If you follow their instructions for formatting, you will have a printable book that you can be proud of. What it does not have is things like extra features that spell-check your manuscript before you hit the submit button, or a previewer right there in their interface that shows you what your book will look like. Instead, they prepare a digital galley proof to show you what your cover and each interior page will look like. You download this once you have all your digital assets uploaded, and it takes them an hour to get back to you with an email showing you where to download it. You open the PDF locally, on your own machine, instead of tying up their server with that task, then you give them either a thumbs-up, “Yes, everything looks fine” or thumbs down, “No, I screwed part of this up, I want a do-over”. It’s missing some of the bells and whistles as compared to the Amazon KDP submission system, but really that’s all. Then they send you another confirmation email so you can double-check your digital galley proof, and you’re ready to go.

They anticipate that if you’re using their service, and you can read and follow instructions, that you will have a successful outcome. That confidence is well placed. There’s nothing broken about their interface. Everything works exactly as described, and the documentation is clear and has no ambiguity whatsoever. My hat is off to whoever maintains their on-line operating manual.

Humans, however, frequently make the error of assuming that they are dealing with a person, not a carefully architected enterprise-grade software interface, and expect it to adapt to variations in user performance and accuracy in ways that just aren’t possible in real-world situations.

I honest to gosh believed people were smarter than to think that changes they made were instantaneous across a multi-national company with distribution centers all over the world, but apparently not. Authors have put up indignant videos about it, having not read and understood the instructions, and expecting Ingram Spark to compensate for that on the fly.

Ingram Spark is a huge, huge system, and they expect you to know what you are doing. The secret sauce is to debug everything on KDP, which has a 1-3 day turnaround time on changes (they claim 3-5, but I’ve never had it take longer than one day), and only when you are dead sure you have it right do you upload to Ingram Spark, and even there the cover art will need adjustment because they compute their own templates. Using the KDP ones won’t work; you have to do the cover art over or it will not fit properly.

I have heard tell that the cover art as printed by Ingram Spark can be slightly more saturated than KDP. I’ve ordered a physical proof copy of Juniper Fairchild and the Alterwhere from them to see for myself, but I expect that a production artist used to variations in printing services will be easily able to compensate—and for all that, it’s likely something you’d only be able to see if you had an Ingram Spark edition and a KDP edition side by side in order to make that comparison. Even professionals would not be able to tell the difference without an A/B test.

If you are self-publishing, or you run a small press publishing company like Helium Beach, you’re going to have to get to know Ingram Spark. There are really no other viable options in terms of distribution and unit cost. There are potential pitfalls, but if you just sit down and read the instructions, you’ll be just fine.

Have fun with it.

by Gene Turnbow | Sep 2, 2025 | Writing

… and yet, I know nothing.

I haven’t even learned all the ways there are not to publish a book, but I now have at least a general idea of what I’m doing. I can tell you this much: publishers and agents really do earn their pay. There is a lot to know, and a lot to know to avoid.

For one thing, you can’t launch your book without both TikTok and Instagram. It’s harder to get traction on Instagram, but at least it’s mostly free of scammers trying to sell you author services. Apparently this is a thing on TikTok. You’ll get a pile of followers quickly, and that really does help, regardless. However, some non-zero percentage of these people are folks trying to sell you book trailers or fake reviews for your book, neither of which helps you, and both of which will drain your bank account with little to show for it.

The clue, by the way, that you’re talking to a scammer is the number after their name. If they have under a hundred followers, they are very probably not a fellow author. I hadn’t known this when I started out, and got buttonholed by one female author who got me talking about my book, then offered to sell me fake reviews. I told her to shove off. A week later, she contacts me again, under the same login-name but with a different number at the end. What I think is happening is that this author, who looks legit when you look her up online, is actually being spoofed by an illicit organization that uses her name and likeness, and by accident they’ll contact the same potential clients, thereby giving up the game because the second one doesn’t know what the first one talked about with you and repeats the same lame sales pitch.

I also found out that there are a bunch of book review services that are used by bookstores and libraries, but that nearly all of them want to review the book three months prior to press time. If you’re self-publishing, you don’t have three months. The time between a final draft and publication might be as little as one week.

In the world of publishing, things move at glacial speeds. For a major publisher, a three-month delay is nothing. For a small press or an independent author, though, that’s a fiscal quarter. Nobody can sit around on a book that long; it’s financial suicide. For the next book I’ll add that into the marketing and distribution plan, but that obviously isn’t happening for Juniper Fairchild and the Alterwhere.

The most cost-effective way of getting reviews for your book is through either Goodreads or Booksprout. Booksprout lets you substitute cash for the social elbow grease you have to apply to make Goodreads work for you, but it seems to me that Goodreads is probably the better long-term plan. It has a social environment Booksprout doesn’t appear to have, and it’s vastly, vastly cheaper. Either way, building a mailing list is essential; even a small one will perform better and do you more good than no mailing list at all. I think failure to do this is why so many indie books fail.

I’ve also encountered something interesting. Apparently an average review of 3.5 stars out of 5 is considered decent. I’m having a hard time with this idea. My review ratio is more like 4.75 stars out of 5. My writing style has been compared to those of Neil Gaiman (I know, I know, but remember him as a writer, not a human being with questionable personal values), T. Kingfisher, Terry Pratchett, Lewis Carroll, J.R.R. Tolkien, and L. Frank Baum. Once I step outside the protective bubble of my personal advance review list, I might do more poorly, but people don’t tend to make those specific kinds of remarks unless they mean them. When you say things on the internet, they tend to stick around.

Anyway, the point being that that fellow I found with a 3.5 stars out of 5 review average seems to be selling very well. I’m hopeful that this speaks well of my own potential.

Of course I’m scared to death that it’s all a data mirage, and that I’ve been basing my research on people who were either lying or didn’t have a clue themselves, or both. There’ll be no way to know until release day.

And now we wait.

-30-

by Gene Turnbow | Aug 5, 2025 | Business, Writing

I am not, and have not been, in my thirties for a very long time. That said, I probably don’t have the time to wait around to pummel hundreds of agents with my manuscript until I am “discovered” by one of them. I have therefore decided to take matters into my own hands.

Helium Beach is an imprint I have been operating through Krypton Media Group for about five years now, as of this writing, and I was using Patreon money to buy short stories for publication. Needless to say, this sucks as a business model, so after a couple of years I stopped buying articles. Now, though, Helium Beach comes back into play. I am releasing my first novel, Juniper Fairchild and the Alterwhere, through this imprint, and I have established it with various publishing agencies and companies. The eBook and the paperback release on September 8, 2025.

And now I am swimming through the sudden realization that what I know about social media you could drown in a teacup. The learning curve has been soul shredding, terrifying. Yet, failure is absolutely not an option here, so despite the emotional trauma at having to get my feet back under me at 68 and start a whole new career, here we f**king go.

I’ve learned how to do interior design and cover design, how to work with an editor and the importance of using one, how to get through endless rewrites, and everything else, all while I keep my radio station running. That’s the radio station, by the way, without which I would have had zero shot at any of this and would have been truly starting from scratch. The station, SCIFI.radio, has a social media reach of about 140,000 pairs of eyeballs due to its presence on five or six social media platforms, and without that springboard I honestly have no idea how well I would be doing as an author. Probably not well.

It’s been exhausting, having to learn all this on top of having to write the book in the first place. I can see why a lot of indie authors never sell more than a dozen copies of their book. I think this just might be the most challenging thing I’ve ever done, not because the work is that hard, but because it’s so alien to how I think. I was raised to believe that if I did good work, people would notice, and I would rise. And that’s not actually how things work at all.

Quantity over Quality

You might find it appalling, but success has more to do with how you twiddle the knobs than the quality of your work. I mean, sure, the quality has to be there at the end, or your sales success will be singular and short-lived, but to begin with, before anybody’s seen your actual work, you can get quite a long way before you actually have to deliver the product.

TikTok and Instagram, for example, are currently considered the platforms for marketing and selling books. Nothing else really moves the needle. Facebook, which I had been relying upon for years, absolutely doesn’t. Yet, both platforms favor videos of a length between seven and fifteen seconds. You’re not doing much selling in seven seconds. You have to fall back on repetition, posting a couple times a day, sometimes for months, before you start to see a benefit. I can tell you, it’s exhausting.

I was just reading about one young lady (whom I will not name, in case she wants to try again and do better next time) who had somehow managed to get 4,900 pre-orders on her book, and then the book released, and all the people who bought it felt cheated because little attention had been paid to preparing the book for publication. It was poorly written, poorly edited (good editing could have fixed the “poorly written” part), and contained numerous spelling errors. She got probably a $30,000 payday out of it as a first-time author, and yet somehow had managed to run the prerelease gauntlet without getting a proper editor in the mix; the point being that it is possible to learn the intricacies of the sales engine without having an actual salesworthy product.

The Actual Path

By this time you can figure out for yourself why indie writers usually fail. It’s not enough to write a great novel. If you’re an indie author, you not only have to write your book, but you’re also responsible for all the things your agent would normally do for you, and all the things your publisher would normally do for you. You have no idea what you’re facing until you do it for the first time, nor do you have a clear idea of just how deep this rabbit hole goes. The successful self-published author has mastered every single one of these tasks.

And here’s the kicker to all of it. The decision to self-publish might have been the right answer, even if I want to be traditionally published later. An astonishing number of agents now only accept authors with proven sales records and established social media spheres, because they can’t risk gambling on somebody who doesn’t have either one. The publishers are getting this way too. It used to be that the proving ground for new writers was selling short stories to the trade magazines, but they’re all gone now, and that hasn’t been true for probably half a century. Writing shorts is still a way to polish up your writing skills, but if I’m honest here, writing short stories and writing novels are two completely separate skill sets. Success in one does not require or suggest success in the other.

Anyway, long story short, if you’re an indie author trying to climb to the top, don’t give up. You’re doing it right. There is no slush pile anymore. Indie publishing is the slush pile. Persistence will win the day.

by Gene Turnbow | May 15, 2025 | Uncategorized

I’ve started querying agents. This might take a while.

I keep reading about how long it takes to get an agent, and that much of the time “no” doesn’t mean your book is bad, it just means it doesn’t fit the flow of what they’re doing this year.

And, I have to assume that most people who query don’t even know what a query is supposed to look like but submit anyway, i.e., most people who think they’re writers don’t actually have any idea how the game works (and it is a game). That’s got to be skewing the numbers big time.

When I wanted to get into the FX industry, I just went ahead and did it and ignored the people who said how hard it was or that I would never pull it off. The same thing happened when I went into the game industry, and when I wanted to get into UCLA Film School, and when I wanted to work in feature animation. I did all of them. The lesson I learned is that all the horrible statistics take into account the most wildly stupid and self-destructive applicants in each pursuit who never get past the front door.

Screening applicants for positions at the feature animation studio taught me that for each successful hire, there would be 300-400 applicants, out of which perhaps a dozen might have the basic requirements for the job, and only two or three might actually have everything we were looking for.

That’s only about 3% that make the “I’m not an idiot” cut. And less than one in three of those got hired. Which is FASCINATING, because that’s the same ratio of would-be authors who start a book that go on to see their work published. It doesn’t prove correlation, but it suggests it really f-ing hard.
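If you want to check my arithmetic, here is a quick back-of-the-envelope sketch in Python. It only uses the rough figures from my own recollection above; treating "a dozen" as exactly 12 and assuming one hire per opening are my simplifications, and the function name `funnel` is just something I made up for this illustration.

```python
def funnel(applicants, qualified, finalists, hires=1):
    """Express each stage of a hiring funnel relative to the stage before it."""
    return {
        # Share of all applicants who meet the basic requirements
        "qualified_pct": 100 * qualified / applicants,
        # Share of all applicants who have everything we were looking for
        "finalist_pct": 100 * finalists / applicants,
        # Fraction of the qualified group that actually gets hired
        "hired_per_qualified": hires / qualified,
    }

# Rough figures from the paragraph above; "a dozen" is taken as exactly 12.
big_pool = funnel(applicants=400, qualified=12, finalists=3)
small_pool = funnel(applicants=300, qualified=12, finalists=2)

for label, stats in (("400 applicants", big_pool), ("300 applicants", small_pool)):
    print(label, {name: round(value, 2) for name, value in stats.items()})
```

With 400 applicants, the dozen qualified people come to 3% of the pool; with 300, it's 4%. One hire out of a dozen is roughly 8% of the qualified group, which is comfortably under one in three.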

It also suggests that I’m probably a lot closer to getting published than I think I am. We’ll see.

by Gene Turnbow | May 14, 2025 | Books, Business, Writing

I’ve finished the third draft, and still frankly doing little tweaks here and there. I’ve learned that most agents, if not all of them, request the first three chapters of the book to read when you submit a query, so I’ve been polishing. A word here, a phrase there, suddenly it all seems to have outsized importance. And then, of course, it makes me want to go through the entire book and do that to every chapter.

When I’m alone with my thoughts, when I’m writing the story, that’s the sweetest, most engaging part of the entire process, but now I’m faced with having to do the one thing I’ve never ever been good at, the one thing that terrifies me more than anything else I’ve ever done in my entire life that didn’t involve doing something like playing electric guitar and singing on stage, solo, in front of an auditorium filled with my high school peers and their parents (this was when I was 17), encountering a band of thieves in my own home, led by someone I thought was my best friend (this happened when I was nineteen), or diving in the driver’s side window of my mother’s borrowed car to grab the emergency brake as it was about to dive off a cliff off the end of a pier into the Pacific Ocean (this happened when I was twenty), or having to pull over to blow out an engine fire on my way to work and then get back in the car and drive the rest of the way to work (this happened when I was 40).

I have to sell an agent on the idea of representing my book to a publisher.

I’m terrible at salesmanship. Throughout my life, any time I’ve been confronted with having to do it, it’s always been a horrible experience and I’ve failed at it miserably. But this time I can’t afford to fail, because the rest of my career as a writer depends on my being able to pull just one more miracle out of that dark secret place in the back of my trousers where flying monkeys come from.

I’m doing all the things I think I’m supposed to. I’ve gotten myself an annual membership on QueryTracker, which is a web site meant to help you find agents and keep track of whom you’ve submitted to and what they said, or didn’t say, afterwards. I’m considering entering BookPipeline’s unpublished author’s contest. To be honest, though, I have no idea if that’s a good idea, or if it would help me in the slightest. They don’t even start judging until September, and that seems a very long time from now, and I’m impatient to get started pitching agents.

I’m told that I need to start working on my next book while I work on selling this one, because the publishing industry runs at the speed of books, which is to say, not very speedy at all. Even if I get an agent right away, which isn’t terribly likely, I might see my seventieth birthday before the book is published, assuming it ever gets there. This prospect does not fill me with confidence. I’m sixty-eight now. I don’t want my life to go by while I wait to see if I get to be a real writer. Frankly, the odds aren’t good.

I will tell you something, though. The reason most people fail at getting an agent is that their work isn’t finished before they submit their queries, or they query the wrong agents because they haven’t done any research, or they can’t follow simple instructions given them by the agents. Frequently they just have no idea how writing a novel works, and have written something unreadable, and their books are nowhere near where they need to be to submit. Your book doesn’t have to be in its final, polished, perfect form, but it needs to be as good as you can make it, and it should have been through the hands of a professional editor before you submit (mine now has). The odds of my getting an agent are probably far, far better than I believe they are, because most of the field is just self-disqualifying.

Now that my manuscript is finished, the real adventure begins.

Wish me luck.

by Gene Turnbow | Apr 26, 2025 | Books, Writing

Now that I’ve gone through the whole book, replaced a chapter I shouldn’t have yanked, and found a bit over 1600 instances of filler phrases and useless sentences to either edit or remove entirely, the manuscript is in the hands of my editor, Lori Alden Holuta.

And I am starting to be faced with the questions of how do I pitch this thing, to whom do I pitch it, and how does the publishing industry work from this point forward. One important web site turns out to be QueryTracker, which is the natural evolution of an industry so swamped with people looking for agents, people who dearly wish to be writers but don’t quite reach top tier, that there needs to be a service to help agents coordinate it all.

Gone are the days when you could go to a publisher’s office and throw the manuscript into the office over the transom and expect that it might get read someday. The publishing industry is now far, far busier than that, and even just the fantasy genre by itself has grown over 40% in the past three years. It’s not just a river of submissions now. It’s a tsunami, made all the worse by people thinking that A.I. can write their books for them. I know publishers who have had to close their submission pipeline entirely while they wade through the sudden oceans of crap that weren’t in the pipeline just three years ago. It’s disheartening.

At the same time, it’s uplifting. Because while it’s harder to stand out than it was, when somebody does actually stumble across my manuscript, it will shine all the brighter. I might actually have a shot at getting agented, and if that happens, I could be published by TOR, or Baen, or DAW, or Dell.

I’m impatient. If this isn’t going to work, I want to know sooner rather than later. That isn’t how the publishing industry works, though. It might take two or three years to learn the fate of my first book, and that means that if I want a career as a published author, I have to start writing the next one whether or not I know that the first one will ever sell. That’s going to be a leap of absolute faith, or hubris, I’m not sure which.

But I’m not giving up, or stopping, or even slowing down, because the only option is to Keep Moving Forward. It might be a long shot, but it’s still my best possible future, and my best possible bet.

I’m taking it.

— Gene

by Gene Turnbow | Apr 10, 2025 | Books

It’s easy to say, “I’m writing a book.” Lots of people say that at parties. It’s hard to actually sit down and write one. This is why people treat the news that you’re working on a book with as much enthusiasm as they do a fart in an airlock, because the world is full of pretenders. And I mean full of them. A great many people want to be a writer, but don’t want to actually write.

And then when you say, “My first draft is finished,” now it’s a matter of both pride and fear. So many people never get that far. I’ve heard estimates that as few as 3% of people who start a book actually finish a first draft.

So, as you might have guessed, I have now finished my first draft, and it’s in the hands of the beta readers. And all I can do now is wait for their feedback, and I hate waiting, of course. And then too, some of the advice I’ll get back will be useful, or even vital, and some of it won’t be. And after I’ve gone through and polished the manuscript based on their recommendations and some of my own revelations, it will be time to either look for an agent, or prep it for publishing via a smaller publishing company that wouldn’t necessarily require me to use one, or self publish.

There are parts of the book, by the way, that ended up being cut because they don’t fit the story, but that will make wonderful short stories or novelettes. They might get included when the book publishes, and they might appear here first as a thank you for your continued support.

Running the radio station while I do this has been an experience as well, and I want to thank you for staying with me while I do all of this, and being with my team as we keep all the little wheels and gears from falling off. The station means a lot to a lot of people, and I don’t know if you’ve checked lately, but we are now the only science fiction themed radio station in the world, and have been for a while. You have earned the right to polish that particular apple, because it’s you and your contributions that make it all possible.

Second draft, here we come.

by Gene Turnbow | Mar 13, 2025 | Technology

A friend once asked me for my opinions on the use of artificial intelligence. I’ve been a big fan of A.I. for most of my life, and it’s been a popular theme in science fiction. But now, we actually have credible A.I. in our social and commercial environment, and it’s time to address the elephant in the room.

Artificial intelligence is a tool, nothing more. One does not condemn a table saw because it can present more teeth to the plywood panel faster than a human could. Such assertions that it is somehow inherently evil are misguided and disingenuous at best.

Generative AI does depend on having been trained by observing the works of artists, and a great many of them. This, however, is also true of human artists, and we do not consider this theft or misappropriation. Those who present this notion as viable apply a double standard. The same is true of the written word. Generative AI learns by observing the work of others. It’s not a copy-paste machine. It does not now work that way, and never has, and those who imply that it is somehow “stealing the works of others” clearly do not understand how either artificial intelligence or human creativity works well enough to make an intelligent comparison.

The areas where generative AI shines are the technical ones: writing code that runs, diagnosing complex networking issues, constructing database applications that perform specific tasks. It can also do miraculous things, like protein folding, and speeding the discovery of new, previously unknown materials.

That said, one does not just lay the wood on the table and press the button, hoping for a replica Louis XIV divan to come out the other side. It’s just a tool. It requires a human being to make the decisions as to where to cut, and why. Artificial intelligence is mostly useless when it comes to creative acts, for it cannot create, except under the express direction of a human being.

Those who rely on generative AI for their writing simply by typing a quick command and pressing a button have removed themselves from the equation, and those who present the output of generative AI as their own without any material guidance are, in my view, charlatans and cheaters. The same is true of those who use pushbutton AI to make images and then present those images as their own work. There is a role for AI in image generation, but deceptively passing it off as one’s own creative work is an unworthy occupation.

The U.S. Copyright Office has clarified its stand on the use of A.I. in creative works. They have said that if A.I. is used as a tool to create elements used in the finished composition, one may copyright such a work. But if the finished piece was created without the guidance of a human hand, it cannot be copyrighted, for machines may not author anything directly.

I use artificial intelligence when creating graphics, but almost never to create entire images. Instead, I do things like remove people or objects from images, or add missing features. I also use A.I. when coding, because frankly, most of what I have to do is grunt work, and my guidance to the A.I. comes in very precisely defining the task so that I get exactly what I want. It’s like talking to a very literal minded child.

I also use it in my writing, but never to create whole works, only to analyze and to help me organize what I have already written. I have tried, a few times, to have it write things for me, but the results are always mud-dumb, lackluster or outright wrong. And, A.I., no matter how hard you try to set up meta rules to combat this, tends to tell you whatever the hell it thinks you want to hear. This is not useful behavior in a creative, critical environment.

As I see it, the primary ethical concern with ChatGPT and its ilk is the abuse of the service from the standpoint of people trying to take shortcuts with it, or claiming its output as their own. This can range, therefore, from merely being sloppy and lacking in thoroughness to being outright unethical.

Tools, in essence, are tools. There is nothing inherently good or evil about them. It is in no one’s interest to anthropomorphize A.I. and assign it moral or ethical behavior of its own. It is what we make of it, nothing more.

So, take what you want from this view, but remember that you can’t just declare something to be true in defiance of fact or objective proof to the contrary. See the situation for what it is, and plan and react accordingly. No other approach makes sense.

– 30 –

Did I make the header image for this article by pushbutton A.I.? I wanted something decent, and there had to be an image there, but I didn’t care much what. So yes. But I’m not claiming this as my artwork. It’s just generative graphics.
